Table of Contents

Statistics Q&A

Concise questions and answers for a variety of topics. Things you should probably know off the top of your head. Most of this is summarization of texts, papers, stackexchange, or other notes. However, I’ve lost direct references for many.

Basic Stats

What is statistics?

Statistics is the scientific discipline used for designing experiments, data collection, data analysis, and interpretation of analyses for the purpose of reasoning under uncertainty.

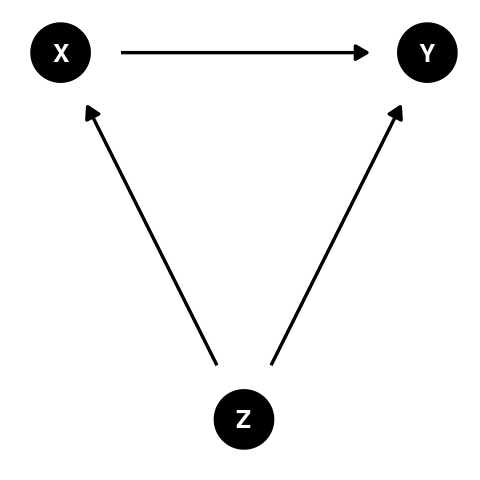

What’s the difference between association and causation?

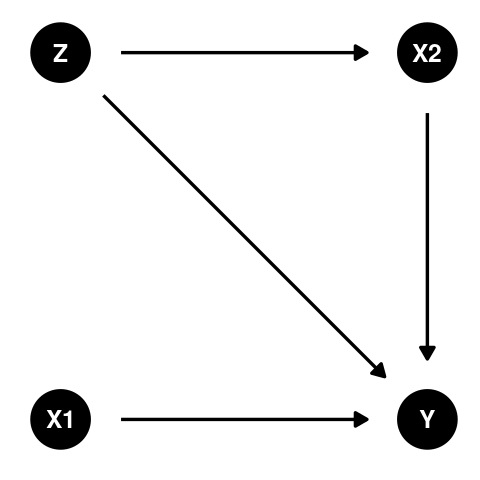

If two variables X and Y form some non-random pattern in their relationship, it can be said they are associated. However, this pattern could be the result of random chance or when there is a third variable Z which influences the previous two variables (in DAG form X←Z→Y). In addition, there may be no direction to this relationship, we can say that X is associated with Y and Y is associated with X.

If two variables X and Y have a causal association, then we can say that X influences Y when changing the state of X causes a change in the probability distribution over the values of Y. Unlike association, there is direction to this relationship. Causation doesn’t necessarily imply a deterministic relationship on an individual level, but does require a deterministic component at the population level (smoking → lung cancer).

- References

Explain the law of large numbers.

The law of large numbers shows that the sample mean converges to the population mean \(\mu\) as the sample size increases. This holds for iid observations from a distribution with finite expected value.

Borel’s law of large numbers states that if an experiment is repeated a large number of times, independently under identical conditions, then the proportion of times that any specified event occurs approximately equals the probability of the event’s occurrence on any particular trial.

Explain the central limit theorem.

The central limit theorem shows that when you sample iid observations from a distribution with finite variance the sampling distribution of the mean approaches a normal distribution.

\(\bar{x} \sim N\left( \mu, \left( \frac{\sigma}{\sqrt{n}} \right)^2 \right)\)

What is a p-value?

A p-value is the probability of how well the data we observed, or data more extreme than what we observed, fits with what was assumed under the null hypothesis and selected model. ~ \(P(data \mid \theta)\). In other words, if there was truly no difference in the population, how rare would it be to observe a sample with the calculated effect size or effect sizes even more extreme?

The p-value is a hypothetical probability derived from the test statistic’s sampling distribution. The sampling distribution is based on a hypothetical world in which the data collection and analysis method were repeated infinite times for the proposed hypothesis test. The resulting p-value is compared to the chosen type 1 error (\(\alpha\)) which is a threshold on the likelihood of the hypothetical repeated analyses to claim a significant result for a truly null comparison. If \(p \le \alpha\) we reject the null hypothesis. If \(p > \alpha\) we do not reject the null hypothesis.

The p-value is not the probability that the null hypothesis is true, and is not the probability that the results are due to chance (rather the p-value may be calculated given the assumption that the results are due to chance).

If p>0.05 should you fail to reject the null hypothesis?

Not necessarily. A large p-value simply flags the data as being not unusual if all the assumptions used to compute it (including the test hypothesis) were correct. If there were violations of those assumptions then we would expect a higher chance of incorrectly concluding to not reject the null hypothesis (false negative).

If p≤0.05 should you reject the null hypothesis?

Not necessarily. A small p-value simply flags the data as being unusual if all the assumptions used to compute it (including the test hypothesis) were correct. If there were violations of those assumptions then we would expect a higher chance of incorrectly concluding to reject the null hypothesis (false positive).

If p>0.05 does it show evidence for the null hypothesis?

No. A large p-value often indicates only that the data are incapable of discriminating among many competing hypotheses (as would be seen immediately by examining the range of the confidence interval).

If p≤0.05 does this mean the chance you’ve made a false positive conclusion is 5%?

No. If you reject the null hypothesis when it is actually true, then the chance you’ve made a false positive error is 100%. The 5% refers only to how often you would reject it, and therefore be in error, over very many uses of the test across different studies when the test hypothesis and all other assumptions used for the test are true. This statement doesn’t apply to a single test.

Statistical significance is a property of the phenomenon being studied, and thus statistical tests detect significance.

This misinterpretation is promoted when researchers state that they have or have not found “evidence of” a statistically significant effect. The effect being tested either exists or does not exist. “Statistical significance” is a dichotomous description of a p-value (that it is below the chosen cut-off) and thus is a property of a result of a study design and the statistical test; it is not a property of the effect or population being studied. In summary, significance is an attribute of the study, not of the effect.

Explain the 95% confidence interval.

Easy to understand: The confidence interval contains all values of the population statistic that we could reasonably believe to be compatible with the sample data.

Accurate: If the data collection and analysis method are valid, and repeated many times, then 95% of repeated experiments would result in a confidence interval that includes the population parameter.

The observed 95% CI has a 95% chance of containing the true effect size.

No. The frequency that an observed interval contains the true effect is either 100% if the true effect is within the interval or 0% if not. The 95% refers only to the situation if you replicated the exact same study design an infinite amount of times, then 95% of those confidence intervals would contain the true effect size if all assumptions used to compute the intervals were correct.

If two CIs overlap, the difference between two estimates or studies is not significant.

No. The 95% confidence intervals from two subgroups or studies may overlap substantially and yet the test for difference between them may still produce P < 0.05. Suppose for example, two 95% confidence intervals for means from normal populations with known variances are (1.04, 4.96) and (4.16, 19.84); these intervals overlap, yet the test of the hypothesis of no difference in effect across studies gives P = 0.03. As with p-values, comparison between groups requires statistics that directly test and estimate the differences across groups. It can, however, be noted that if the two 95% confidence intervals fail to overlap, then when using the same assumptions used to compute the confidence intervals we will find P < 0.05 for the difference; and if one of the 95% intervals contains the point estimate from the other group or study, we will find P > 0.05 for the difference.

What is type 1 error?

The probability of rejecting a true null hypothesis. AKA false positive.

\(\alpha\)

What is type 2 error?

The probability of failing to reject a false null hypothesis. AKA false negative.

\(\beta\)

When do you need to adjust for multiple comparisons?

When you test multiple hypothesis using frequentist methods, want to control for possibly increasing number of false positive results, and can allow for increased proportion of false negatives.

What is the probability of at least one false positive among multiple independent hypothesis tests?

If n independent hypothesis are tested and each of the null hypothesis are actually true, then the probability that at least one of them will be rejected is \(1 - (1 - \alpha)^n\).

For example, when \(\alpha\)=0.05 and n=20, then \(P(\geq 1 \text{ false positive})=0.64\).

The probability of not making any false rejections in a set of \(n\) tests is \((1 - \alpha)^n\).

What’s the difference between a p-value and an FDR adjusted p-value?

p-value

If the data collection and analysis method were repeated many times for a single test, we expect no more than \(\alpha\) of the hypothetical repeated analyses to claim a significant result for a truly null comparison.

False discovery rate (FDR) adjusted p-value

Among a set of independent hypothesis tests that are significant at the chosen \(\alpha\) level, we expect no more than \(\alpha\) of them to be falsely significant. For example, if \(\alpha = 0.01\), we expect that 1% of the tests with FDR adjusted p-value as small or smaller to be false positives.

To calculate an FDR adjusted p-value: 1) order all p-values from smallest to largest, 2) multiply each p-value by the total number of tests, 3) divide this value by the rank order of the p-value, 4) make sure this sequence of transformed p-values is non-decreasing by setting the preceding transformed p-value to the smaller value (repeatedly until the condition is satisfied), and 5) set transformed p-values greater than 1 to 1.

The FDR adjusted p-value and q-value are not the same thing. The FDR adjusted p-value controls the expected FDR and guarantees that the true FDR rate will be less than the specified rate on average over repeated data collection and analyses. The FDR adjusted p-value based on the BH approach is slightly more conservative than the q-value, but holds regardless of the number of tests, whereas the q-value is an asymptotic value for providing an unbiased estimate of the FDR.

Note that the FDR adjusted p-value is one way to control/define type 1 error rates under multiple hypothesis testing. The distinction between the common methods [1]:

Null True Alternative True Total Not Significant U T \(m\)-R Significant V S R Total \(m_0\) \(m-m_0\) \(m\) Where a set of \(m\) hypothesis are tested, \(m_0\) is the number of true null hypothesis, and R is the number of rejected null hypothesis.

- Per comparison error rate (PCER)

- The expected value of the number of Type 1 errors over the number of hypotheses

- \(\frac{E(V)}{m}\)

- Per-family error rate (PFER)

- The expected number of Type 1 errors in a family of hypotheses

- \(E(V)\)

- Family-wise error rate (FWER)

- The probability of at least one type 1 error

- \(P(V \geq 1)\)

- False discovery rate (FDR)

- The expected proportion of Type 1 errors among the rejected hypotheses

- \(E \left(\frac{V}{R} \mid R>0\right)P(R>0)\)

- Positive false discovery rate (pFDR)

- The rate that discoveries are false

- \(E \left(\frac{V}{R} \mid R>0\right)\)

What is power?

The power of a test to detect a correct alternative hypothesis is the pre-study probability that the test will reject the null hypothesis (e.g., the probability the p-value will not exceed a pre-specified cut-off such as 0.05). The corresponding pre-study probability of failing to reject the test hypothesis when the alternative is correct is one minus the power, also known as the Type-II or beta error rate. As with p-values and confidence intervals, this probability is defined over repetitions of the same study design and so is a frequency probability.

Stated concisely, power is the probability of correctly rejecting the null hypothesis, given that the alternative hypothesis is true.

What information is needed for power analysis and sample size calculation?

- Verify the question of interest and the variables/information needed for analysis of this question.

- Verify you are able to collect a representative sample for the question of interest.

- Check if there is previously published data for the question of interest.

- Determine the alpha level required.

- Determine the minimum relevant effect size.

- If the outcome is continuous, determine the population standard deviation. If the outcome is binary, determine the probability of the event in the control arm.

Standardized effect sizes are nice as they remove the need to specify variance. Raw effect sizes are easier to visualize and interpret.

Is it ok to calculate retrospective power?

Very rarely. Retrospective power analyses can be conducted by 4 methods:

- Using both the observed effect size and variance.

- Issues:

- Both the p-value and power are dependent upon the observed effect size and so are inversely related such that tests with high p-values tend to have low power and visa-versa. Therefore calculating power using the observed effect size and variance is simply a way of re-stating the statistical significance of the test. In addition, this method will result in an over-estimate of the true power and the point estimates are often imprecise. The estimate of the observed statistical power is not meaningful because there is no way to be sure that the effect size estimate from the study is the true parameter.

- Issues:

- Only the observed variance

- Benefits:

- Using observed variances but not observed effect sizes is helpful because it allows one to evaluate whether the sample size and alpha level were sufficient to have a good chance of detecting a biologically significant effect given the observed level of variation. You may report power over a range of effect sizes or determine the minimum effect size detectable for a given power.

- Benefits:

- Neither the observed effect size nor variance

- Issues:

- Using standardized effect sizes avoids the need to specify sampling variances, so we only use the sample size and alpha level from the study. The result will provide information to evaluate the study design, but makes it much harder to assess biological significance.

- Issues:

- Avoided completely by computing confidence intervals about the observed effect size.

- Issues:

- This method is only relevant before the results of the hypothesis test are known.

- Issues:

Since the goal is often to simply quantify the uncertainty in the findings of a study, calculating confidence intervals are more appropriate than retrospective power. If the goal is to evaluate the ability of a study to detect a biologically meaningful pattern, then retrospective power might be useful. Calculating power using pre-specified effect sizes (or calculating detectable effect size using pre-specified power) is helpful, especially if easily interpreted raw effect size measures are used. Standardized measures may be useful in more complex tests (such as tests for interaction in multi-way ANOVA) where it is hard to specify an intuitive raw measure of effect size. In these cases power analysis may be performed using conventional levels of effect size, such as those proposed by Cohen (1988). All power calculations should be accompanied by a sensitivity analysis. For power calculations that use assumed values for the effect size or variance, this means using a range of plausible values for each variable. Graphs showing how two or more variables interact with one another are particularly valuable. For power calculations that use values estimated from sample data (such as sampling variance), a confidence interval about the power estimate should be given.

References:

- Using both the observed effect size and variance.

What’s the difference between incidence and prevalence?

Prevalence refers to the number of subjects with the outcome of interest divided by the total number of subjects. It is calculated at a single moment in calendar time or based on a specific moment in time that each subject would experience.

\[\frac{\text{Number of subjects with outcome at specific time}}{\text{Total number of subjects}}\]

Period prevalence is a modification of prevalence that counts all cases which occur during a specific duration of time. It counts all outcomes of interest at the begining time period and the cases which develop within the specified time period. You might refer to the result as a ‘1-month period prevalence’ or ‘1-year period prevalence’

\[\frac{\text{Number of subjects with outcome during time period}}{\text{Total number of subjects}}\]

Incidence refers to the number of subjects which develop the outcome of interest in a fixed time period divided by the total number of subjects at risk of developing the outcome. Subjects already with the outcome of interest are not included in the numerator or denominator. Since follow-up duration may be different between subjects, you can compute e.g. subject-months or subjects-years instead of number of subjects in the denominator.

\[\frac{\text{Number of subjects who developed outcome during time period}}{\text{Total number of subjects at risk of developing outcome}}\]

References:

What is selection bias?

Selection bias occurs when the sampled data has characteristics that are not representative of the population you wish to make make inference on. The consequence of selection buas is that the estimated association between the exposure and outcome differs from the true association among the population.

Some specific cases of this bias occur from inappropriate selection of controls in case-control studies, bias resulting from differential loss-to-follow up, incidence–prevalence bias, volunteer bias, healthy-worker bias, and nonresponse bias.

References:

What is the mean?

A measure of central tendency for a numeric variable.

\[ \bar{y} = \frac{\sum_{i=1}^{n} y_i}{n} \]

What are variance and standard deviation?

- Variance: Dispersion of a variable in squared units

- Standard deviation: Disperion of a variable in original units. The average distance of each value from the mean.

\[ Var(y) = \frac{\sum_{i=1}^{n} (y_i - \bar{y})^2}{n-1} \]

\[ SD(y) = \sqrt{Var(y)} \]

What is the standard error?

The standard error is the standard deviation of a statistics sampling distribution.

- For single Mean: \(se = \frac{sd}{\sqrt{n}}\)

- Difference of means (equal variance): \(se = \sqrt{sd^2 \left( \frac{1}{n_1} + \frac{1}{n_2} \right)}\)

- Difference of means (unequal variance): \(se = \sqrt{\left( \frac{sd^2_1}{n_1} + \frac{sd^2_2}{n_2} \right)}\)

What is covariance?

How two variables vary linearly together in the original units. A positive value means that they move linearly in the same direction. A negative value means they move linearly in the opposite direction. A value of zero means they are not linearly related.

\[\begin{equation} \begin{aligned} Cov(X, Y) &= E[(X - \mu_{X})(Y - \mu_{Y})] \\ &= E(XY) - E(X)E(Y) \\ &= \frac{\sum_{i=1}^{n} [(x - \bar{x}) (y - \bar{y})]}{n-1} \end{aligned} \end{equation}\]

What is Pearson’s correlation coefficient?

The standardized covariance between two numeric variables: ranges from -1 to 1. A measure of strength as to how the paired values fit a line.

\[\begin{equation} \begin{aligned} \rho_{X, Y} &= \frac{Cov(X, Y)}{\sigma_X \sigma_Y} \\ &= \frac{E[(X - \mu_{X})(Y - \mu_{Y})]}{\sigma_X \sigma_Y} \\ &= \frac{E(XY) - E(X)E(Y)}{\sqrt{E(X^2)-(E(X))^2}\sqrt{E(Y^2)-(E(Y))^2}} \\ &= \frac{\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}} \end{aligned} \end{equation}\]

Paradoxes, Phenomena, and Fallacies

What is Simpson’s paradox?

This paradox was described in detail by Edward Simpson in 1951. It occurs when the relationship between two categorical variables reverses (or is diminished or enhanced) when you condition on a third categorical variable. Consider the situation where, as a whole, the control group was more likely to get sick than those who took a drug \((\frac{13}{60} = 0.22 > \frac{11}{60} = 0.18)\). However, within each gender, the control group had a smaller chance of getting sick than those who took the drug: \((\frac{1}{20} = 0.05 < \frac{3}{40} = 0.07 \text{ and } \frac{12}{40} = 0.3 < \frac{8}{20} = 0.4)\). This reversal in relative frequency is because of the mathematical property that if \(\frac{A}{B} > \frac{a}{b} \text{ and } \frac{C}{D} > \frac{c}{d}\) does not imply that \(\frac{A + C}{B + D} > \frac{a + c}{b + d}\). In this case \(\frac{3}{40} > \frac{1}{20}\) and \(\frac{8}{20} > \frac{12}{40}\) but \(\frac{3 + 8}{40 + 20} < \frac{1 + 12}{20 + 40}\).

Both the control and drug groups had 60 people total. However, the control group had 40 men while the drug group had 20 men, and men were overall more likely to get sick \((\frac{20}{60} = 0.33 > \frac{4}{60} = 0.07)\). Looking at the aggregated data hides this fact and results in Simpson’s paradox.

control

drug

sick

healthy

total

sick

healthy

total

female

1

19

20

3

37

40

male

12

28

40

8

12

20

total

13

47

60

11

49

60

You might think this means the conditional analysis is always the correct choice, however, the only way to formally decide is to draw the relevant causal networks, decide on which variables are confounding, and make those assumptions known when reporting the results. Choosing the correct way to analyze data is not based on naively conditioning on every variable measured, but rather is extracted from the context of the question, the data generating process, and design of experiment.

References:

What is Lord’s paradox?

This paradox was described in detail by Frederic Lord in 1967. It occurs when the relationship between two variables (one categorical and one continuous) reverses (or is diminished or enhanced) when you condition on a third continuous variable. The usual example for the third variable is a baseline measurement, while the outcome (response) is the final measurement. There are two options for analysis

- An unconditioned test on the change score

- A conditioned test on the final outcome.

A t-test on the change score, \(y - x2\), provides a summary for the total effect (direct effect of x1 plus indirect effects through all others paths), while an ANCOVA with baseline as a covariate provides the direct effect of x1.

In a pre-post design, if differences between treatment groups at baseline are expected to be zero (such as in a RCT), then a baseline adjusted model and change score model are expected to give the same result. If differences between the two treatment groups are expected, then the two methods are expected to give different results. Thus, the researcher needs to choose the causal mediation effect of interest in order to have a meaningful interpretation.

References:

What is Suppression?

Suppression occurs when the relationship between two continuous variables reverses (or is diminished or enhanced) when you condition on a third continuous variable. The general case is observed when an explanatory variable that is unrelated to the response variable increases the fit of a model. Suppose a model has continuous response y and continuous explanatory x1 and x2. If \(corr(y, x1) = 0\) and \(corr(y, x2) > 0\) and \(corr(x1, x2) > 0\) then including x1 can suppress the part of x2 that is uncorrelated with y. The coefficient for x2 can increase, \(R^2\) can increase, and the coefficient for x1 will be less than zero. In general, suppression is seen when controlling for a ‘third’ variable that is termed a confounder (although this confounder can only be interpreted based on a causal diagram in context of the question).

References:

What is Berkson’s paradox?

In 1946, Joseph Berkson, a biostatistician at the Mayo Clinic, noticed that even if two diseases have no relation to each other in the general population, they can be associated among hospital patients. This can result from a situation where neither of the two diseases by themselves cause hospitalization, but together they do. So conditioning on hospitalization created a spurious association between the two diseases. Determining this effect is hard, and it’s important to create causal diagrams to illustrate the potential confounding paths. This often arises from convenience samples or other sampling bias.

What is Will Roger’s phenomenon?

This phenomenon is attributed (maybe incorrectly) to comedian Will Rogers with the following quote:

“When the Okies left Oklahoma and moved to California, they raised the average intelligence level in both states.”

This is more generally referred to as stage migration. For example, improved detection of illness leads to an increased number of unhealthy people which can result in a increase in life span for both groups even if no improvement in treatment is seen. This is a potential explanation for the increase in cancer survival times. In summary, you may wrongly attribute to treatment effects and should be conditioning on diagnostic criteria.

What is regression to the mean?

In 1885, Francis Galton published a paper on the height of parents and their children. He found that parents on the extreme ends of the height spectrum tend to have children whose height is closer to the average. This effect causes issues when selection for measurement is based on some criterion (above or below a certain value) from an initial measure. Consider the situation where you measure blood pressure for 1000 patients and 285 had DBP values greater than 95mmHg. Instead of following up with all 1000 patients, you only called back the 285 and find that their mean DBP value has decreased. In this situation you would not be able to separate the effect of a drug or other intervention from regression to the mean. To see the effect of an intervention, you would need to randomly assign half of the 285 to a control group and half to a treatment group. Then both groups would have the same regression to the mean improvement and any differences could be attributed to the treatment.

References:

What is Stein’s paradox?

This paradox was described in detail by Charles Stein in 1955 [1] and later refined with Willard James in 1961 [2]. Suppose \(X_1, X_2, ..., X_k\) are independently normal random variables such that \(X_i \sim N(\theta, I)\) where \(\theta = \theta_1, \theta_2, ..., \theta_k\) and \(I\) is the \(k \times k\) identity matrix. It turns out that the best estimator for \(\hat{\theta}_i\) is not \(\bar{x}_i\), but rather \(\hat{\theta}^\prime_i = \bar{\bar{x}} + c(\bar{x}_i - \bar{\bar{x}})\) where \(\bar{\bar{x}}\) is the grand mean and \(c = 1 - \frac{(k-3)\sigma^2}{\sum(\bar{x}_i - \bar{\bar{x}})^2}\) is a constant. It’s possible for \(c\) to be negative, thus should be replaced with 0 for these cases to produce better estimations.

This estimator is not intuitive as it requires combining information from independent populations to produce a more optimal estimator of each population’s mean. The James-Stein estimator is similar to that of Bayes’s equation and is often referred to as empirical Bayes estimator (closer in likeness as \(k\) increases). In the Bayes perspective, the independent samples are joined through the common prior for \(\theta\) and the resulting pooled estimate is similar to James-Stein.

As described in [3], shrinkage is only possible when \(K \ge 3\). The intuition for this can be seen using linear regression. When there are only one or two observations, the linear regression line must pass through those exact points. When there are three or more observations, the regression line represents the James-Stein estimator.

Quoting from Efron and Morris [4]:

What do these equations mean in terms of the behavior of the estimator? In effect the James-Stein procedure makes a preliminary guess that all the unobservable means are near the grand mean (\(\bar{\bar{x}}\)). If the data support that guess in the sense that the sample means are themselves not too far from \(\bar{\bar{x}}\), then the estimates are all shrunk further toward the grand mean. If the guess is contradicted, then not much shrinking is done. These adjustments to the shrinking factor are accomplished through the effect the distribution of means around the grand mean has on the equation that determines c. The number of means being estimated also influences the shrinking factor, through the term (k - 3) appearing in this same equation. If there are many means, the equation allows the shrinking to be more drastic, since it is then less likely that variations observed represent mere random fluctuations. …

The James-Stein estimator does substantially better than the sample means only if the true means lie near each other, so that the initial guess involved in the technique is confirmed. What is surprising is that the estimator does at least marginally better no matter what the true means are.

- [1] https://projecteuclid.org/ebook/Download?urlid=bsmsp%2F1200501656&isFullBook=False

- [2] https://projecteuclid.org/ebook/Download?urlid=bsmsp%2F1200512173&isFullBook=False

- [3] https://projecteuclid.org/journals/statistical-science/volume-5/issue-1/The-1988-Neyman-Memorial-Lecture--A-Galtonian-Perspective-on/10.1214/ss/1177012274.full

- [4] https://doi.org/10.1038/scientificamerican0577-119

What is the Jeffreys-Lindley paradox?

This paradox was discussed by Harold Jeffreys in 1939 [1] and later by Dennis Lindley in 1957 [2]. It centers around the opposition between the frequentist and Bayesian schools of inference in terms of decision rules. This can be seen when the decision to reject or not reject the null hypothesis using a t-test may lead to different conclusions from the comparable Bayesian procedure using Bayes factors (which are a measure of evidence brought by the data in favor of the null hypothesis relative to the alternative) [3]. David Colquhoun [4] reframes the paradox using false positive risk (FPR). FPR is the probability that a result which is “significant” at a specified p-value is a false positive result. Thus, if p=0.05, the FPR actually increases as the sample size increases. In other words, p=0.05 provides strong evidence for the null hypothesis at large sample sizes. So, in situations with very high power to detect an effect, p-values close to traditional cut-offs don’t necessarily provide the correct decision?

What is the absence of evidence fallacy?

The absence of evidence fallacy (argument from ignorance, appeal to ignorance) is a formal logical fallacy. It is explained in the statistical perspective by Douglas Altman and Martin Bland [1] in their note, “Absence of evidence is not evidence of absence”. In other words, failing to reject the null hypothesis for a test of difference does not imply evidence for no difference. All that has been shown is an absence of evidence of a difference, nothing more.

What is the ecological fallacy?

The ecological fallacy occurs when statistical analyses are made on aggregated group-level data, but interpretations are applied to the individuals who made up those groups. The erroneous results are often due to uncontrolled confounding? Ecological fallacies are often seen in misinterpretations of meta-analysis.

What is the prosecutor’s fallacy?

This fallacy also goes by the names base-rate fallacy, inverse fallacy, or transposed conditional error. Using legal terms, it’s making the error that \[P(\text{innocent} \mid \text{evidence}) = P(\text{evidence} \mid \text{innocent}),\] when in reality \[P(\text{innocent} \mid \text{evidence}) = \frac{P(\text{innocent})P(\text{evidence} \mid \text{innocent})}{P(\text{evidence})}\] Or, in general terms, making the error that \[P(\text{Data} \mid H_0) = P(H_0 \mid \text{Data}).\]

The simplest example can be seen as \(P(\text{Doctor} \mid \text{Surgeon}) = 1\), however \(P(\text{Surgeon} \mid \text{Doctor})<1\).

Errors may arise in the medical setting when the prevalence of a disease is ignored while interpreting diagnostic results. Suppose the false positive rate of a medical test is 1/100 (1 chance in a 100 that the test says positive in cases that are actually negative) and the prevalence in the population is 1/1000. This means that with no other evidence to predispose you to having the disease, you have 10:1 odds that the test was incorrect.

As another example, suppose the prevalence of a disease (D) is 1/1000. The sensitivity of a test (probability the test is positive given the person has the disease) is 0.8 and the specificity (probability the test is negative given the person does not have the disease (H)) is 0.8. Assuming a person was tested at random with no other evidence suggesting disease:

\[\begin{aligned} P(D \mid +) &= \frac{P(+ \mid D)P(D)}{P(+)} \\ & = \frac{P(+ \mid D)P(D)}{P(+ \mid D)P(D) + P(+ \mid H)P(H)} \\ & = \frac{P(+ \mid D)P(D)}{P(+ \mid D)P(D) + (1 - P(- \mid H))P(H)} \\ & = \frac{0.8 \times 0.001}{0.8 \times 0.001 + 0.2 \times 0.999} \\ & = 0.004 \end{aligned}\]

So our knowledge has increased from the baseline probability of disease as 0.001 to the new probability of disease as 0.004.

What is the gambler’s fallacy?

The gamblers fallacy (AKA hot hand fallacy) is the belief that prior outcomes from a series of random events affect the probability of a future outcome.

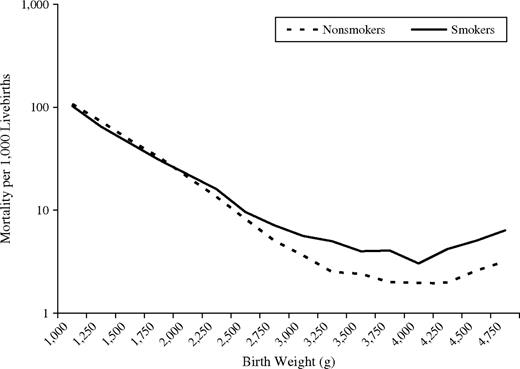

What is the low birth weight paradox?

Birth weight is a predictor of infant mortality. For this reason, and because birth weight data are readily available, investigators have frequently conditioned on birth weight when evaluating the effect of infant mortality. This often produces a crossover of the birth weight specific mortality curves. LBW infants in groups with a high prevalence of LBW have a lower mortality rate than LBW infants in groups with a low prevalence of LBW, whereas the opposite is true for normal-weight infants. This phenomenon is known as the “birth weight paradox”. Miguel Hernan et al. [1] used causal diagrams to show that this apparent paradox can be conceptualized as selection bias due to stratification on a variable (birth weight) that is affected by the exposure of interest (smoking) and that shares common causes with the outcome (infant mortality) (see also, collider bias).

What is the table 2 fallacy?

The table 2 fallacy was highlighted by Daniel Westreich and Sander Greenland [1].

It’s common in medical studies for table 1 to be a table of demographic, social, and clinical characteristics of the study groups, often categorized by levels of exposure (treatment). Table 2 is commonly a table of multivariable regression results. The multivariable model contains effect estimates for both the primary estimate of interest (exposure) and for secondary risk factors or confounders. By presenting adjusted effect estimates for secondary risk factors alongside the adjusted effect estimate for the primary exposure, Table 2 suggests implicitly that all of these estimates can be interpreted similarly, if not identically. This is often not the case. They can only be interpreted after defining the causal diagram (DAG) and if variables may summarize the primary effect, secondary effect, total effect, direct effect, and other factors in effect interpretability.

- Primary effect: The causal effect of an exposure of primary interest.

- Secondary effect: The causal effect of a covariate not of primary interest in the initial adjustment model (e.g., a confounder or effect-measure modifier).

- Total effect: The net of all associations of a variable through all causal pathways to the outcome.

- Direct effect: An association after blocking or controlling some of those pathways.

What is the Hauck-Donner effect?

The Hauck-Donner effect occurs when the log-likelihood surface is non-quadratic (such as when the sampling distribution of a parameter is not normal) and extremely large parameter estimates near the parameter space boundary are given as a result. Hauck and Donner (1977) [1] showed that the Wald test statistic does not monotonically increase as the distance between the parameter estimate and null value increases. This results in the Wald test having low power leading to nonsignificance of a potentially truly large effect.

This effect is usually framed in the context of logistic regression, but can occur in any GLM. The likelihood ratio test may be used instead of the Wald test since it only assumes a weaker asymptotic assumption that the LR statistic is proportional to a \(\chi^2_r\) distribution.

What is Freedman’s paradox?

Freedman’s paradox is based on an observation by David Freedman in 1983 [1] on how variable selection can lead to erroneous significance. For example, if a regression model is fit with many parameters then refit again on only the parameters that were significant, then the overall F-test will be incorrectly inflated.

This issue is representative of the overall issue with traditional stepwise model selection. Confidence intervals and p-values for parameter estimates are too small and the illusion of confirmatory results for model structure is promoted.

Other methods and ideas to avoid.

Commonly used methods and ideas that are statistically unsound, suboptimal, or just unnecessary for most cases. Many of these items were from Stephen Senn, Frank Harrell, https://discourse.datamethods.org/t/reference-collection-to-push-back-against-common-statistical-myths/1787, and https://ard.bmj.com/content/74/2/323.

- Do not dichotomize ordered/continuous variables.

- Dichotomizing continuous variables results in loss of information and assumes the effect on the response is a step function which only changes at the cut-point.

- https://doi.org/10.3174/ajnr.A2425

- https://doi.org/10.1002/sim.2331

- https://doi.org/10.1186/s12874-019-0667-2

- https://doi.org/10.1136/bmj.332.7549.1080

- https://doi.org/10.1093/jnci/86.11.829

- Do not use change from baseline to measure difference.

- In a baseline adjusted model, if the slope for the baseline covariate is equal to one, then the model is identical to the change score analysis. If the slope for the baseline covariate is zero, then the model is identical to ignoring the baseline value.

- Some argue that baseline adjusted models should not be used in observational data (where an exposure could be present before the baseline score was measured). See Lord’s paradox for more information.

- https://doi.org/10.1002/sim.4780111304

- https://doi.org/10.1136/bmj.323.7321.1123

- https://doi.org/10.1186/1745-6215-12-264

- https://www.fharrell.com/post/errmed/#change

- https://doi.org/10.1161/strokeaha.121.034859

- https://doi.org/10.1093/aje/kwi187

- https://content.sph.harvard.edu/fitzmaur/ala2e/

- page 126

- Do not use responder analysis.

- Do not use minimization.

- Do not use propensity scores.

- Matching is probably the worst offender here, but other flavors are unnecessary as well. Senn writes, “I like to start by comparing completely randomised and matched pair designs. In both cases the PS is 1/2. However to analyse the latter as if it were the former is regarded as an elementary error. However the point estimate is the same so what’s the problem? The problem is the standard error is different. Much confusion in discussing analysis and design arises from assuming the point estimate is the goal.”, … “What matters is whether something is predictive of outcome not of assignment.”

- https://doi.org/10.1002/sim.3133

- https://doi.org/10.1016/j.jacc.2016.10.060

- https://doi.org/10.1017/pan.2019.11

- https://www.youtube.com/watch?v=rBv39pK1iEs

- Do not use split plot analysis of repeated measures.

- Do not use sigma-divided measures.

- Examples using Cohen’s d

- John Myles White writes, “Also this is a good time for my regular reminder that Cohen’s d is only useful for power analysis. If a medical treatment lets me live one year longer, increasing the population-level variation in lifespans doesn’t decrease the value of that year for me in any way.”

- Andrew Vickers writes, “if my boss gives me a $1000 pay rise, whether I should be pleased or not depends on the standard deviation of salaries at my hospital, right?” (But we would be interested in the standard deviation of pay raises.)

- https://doi.org/10.1198/sbr.2010.10024

- Examples using Cohen’s d

- Do not report number needed to treat (NNT).

- Do not use two-stage analysis of cross-over designs.

- Do not adjust for simple carry-over.

- Do not use type III or Type I sums of squares.

- No one wants sequential sums of squares (Type I).

- Type III is either no different from the Type II and hence redundant or it is, because it adjusts an unbalanced main effect for an interaction. See chapter 14 of http://senns.uk/SIDD.html.

- Do not ignore the difference between blocks and treatments.

- Do not use global tests of significance.

- A test that assumes all levels are equal is rarely of interest. Instead, just estimate and show the specific contrasts between levels using appropriate confidence intervals.

- Do not attach a p-value to a descriptive summary.

- Descriptive summaries of a sample do not require information about statistical significance or p-values. Only when inference is made on the representative population is statistical significance relevant.

- Do not ignore covariates in a randomized controlled trial (RCT).

- Variable selection

- Do not use stepwise

- Do not use univariable screening

- Do not blindly use the word “normality” without a sentence or two that fully describes the context of the problem.

- I’ve never seen a client who understands this concept and regularly see other statisticians reinforce their misconceptions…

- In regression modeling, there isn’t concern about any variable being normal. However, the least important assumption of regression modeling does call for normality of the errors (residuals).

- Hypothesis tests for normality exist, however statisticians do not use them. Statisticians use diagnostic plots, spend a moment or two to consider any issues they might suggest, and then ignore these issues and proceed with the analysis :)

- https://doi.org/10.1186/1471-2288-12-81

- https://doi.org/10.1007/s00362-009-0224-x

- https://doi.org/10.7275/55hn-wk47

- Do not use significance testing in pilot studies.

- The purpose of pilot studies is to identify issues in all aspects of the study ranging from recruitment to data management and analysis. It isn’t concerned with making inference on population effects.

- https://doi.org/10.1136/bmj.i5239

- https://doi.org/10.1111/j.1752-8062.2011.00347.x

- Do not say the Wilcoxon test is about the median.

- Inferring anything about the median is only possible if the variables are sampled from population distributions which differ only in location, not shape or scale (which is unlikely).

- The Wilcoxon test is actually about the rank sums. Both variables can have the same 25th and 50th percentiles, yet if one has a larger 75th percentile then that may support the claim that distribution has a larger rank sum.

- https://doi.org/10.1080/00031305.2017.1305291

- Multiplicity adjustements are not necessary in exploratory studies.

- The goal of exploratory studies are to make sure we do not miss out on some potentially new and interesting finding. After observing this new finding, additional studies will need to take place to verify the results. Thus, we are not worried about making type I errors (false positives), but are worried about making type II errors (false negatives). By definition, adjusting for multiplicity increases the probability of making a type II errors to control the probability of making a type I error. Therefore multiplicity adjustments are not necessary for this study. (some caveats apply…)

- https://doi.org/10.1016/s0895-4356(00)00314-0

- https://doi.org/10.1016/j.athoracsur.2015.11.024

- https://doi.org/10.1097/00001648-199001000-00010

- https://doi.org/10.1111/brv.12315

- https://doi.org/10.1136/bmj.316.7139.1236

- https://doi.org/10.1016/j.actpsy.2014.02.001

- https://doi.org/10.1111/bju.14640

- https://doi.org/10.1186/1471-2288-2-8

- Do not use ROC optimal cutpoints.

- The sample size is often not even large enough for an accurate estimate of the population prevalence. If so, how would the data contain the information to choose the optimal cutpoint from all competing possibilities?

- ROC curves are based on sensitivity, \(P(\text{test positive} \mid \text{actual positive})\), and specificity, \(P(\text{test negative} \mid \text{actual negative})\). This means we condition on what is unknown to estimate what is known. How is this backwards reasoning relevant for individual predictions?

- ROC optimal cutpoints assume that sensitivity and specificity are constant for any given observation. However, just as the likelihood of one outcome varies from observation to observation, and is dependent upon selected predictor variables, sensitivity and specificity also vary from observation to observation and are dependent on covariates. For example, sensitivity increases along with any covariate related to outcome likelihood.

- https://doi.org/10.1002/pst.331

- https://doi.org/10.1002/sim.1611

- https://www.fharrell.com/post/backwards-probs/

- Do not use Yates’ continuity correction.

- Pearson’s asymptotic \(\chi^2\) test with Yates’ continuity correction is often the default statistic calculated in many software packages. However, Yates’ continuity correction is too conservative, similar to Fisher’s exact test.

- https://doi.org/10.1002/sim.4780090403

- https://doi.org/10.1002/sim.3531

- Do not dichotomize ordered/continuous variables.

Models

Theory

What is ordinary least squares (OLS)?

- Ordinary Least Squares (OLS) is an estimation method that corresponds to minimizing the sum of square differences between the observed and predicted values (SSE).

- The closed form solution is \(\hat{\beta} = (X^TX)^{-1} X^T Y\).

- Under the assumption that the linear model residuals are normally distributed, OLS and MLE estimators are equivalent.

- The Gauss-Markov theorem shows that the OLS estimates are the Best Linear Unbiased Estimators (BLUE) (minimum variance and unbiased) when the OLS model assumptions are satisfied (Errors have mean zero, uncorrelated, and homoscedastic. Normality of errors is not required.).

What is maximum likelihood estimation (MLE)?

- Maximum Likelihood Estimation (MLE) is an estimation method that corresponds to maximizing the likelihood function.

- The MLE estimates of the model coefficients are found through an iterative algorithm:

- Specify the statistical process (linear model) that relates the response to the predictors.

- Choose starting values for the coefficients

- Find the residual and likelihood score

- Propose new coefficient values that will improve the likelihood.

- Repeat until there is no improvement in the likelihood

- The cost function for MLE becomes minimization of the negative log-likelihood (NLL).

- The expectation maximization (EM) algorithm is a common MLE estimation method.

- Under the assumption that the linear model residuals are normally distributed, OLS and MLE estimators are equivalent.

What is Bayesian inference?

Bayesian inference can be thought of in the following three steps

- Creating the full probability model

- A joint probability model for all observable and unobservable quantities in a problem.

- Conditioning on observed data

- Calculate the posterior distribution (the conditional probability distribution of the unobserved quantities of interest, given the observed data)

- Evaluating the fit of the model and the implications of the posterior distribution.

- How well does the model fit the data?

- Are the conclusions reasonable?

- How sensitive are the results to the assumptions made in step 1?

The posterior distribution is formed using the combination of the likelihood and prior models:

- Posterior density

- \(p(\theta \mid D) = \frac{p(D \mid \theta)p(\theta)}{p(D)}\)

- Unnormalized posterior density

- \(\text{Posterior} \propto \text{Likelihood} \times \text{Prior}\)

- \(p(\theta \mid D) \propto L(\theta \mid D) p(\theta)\)

Above describes “full” Bayesian inference, where the distinction between various methods are defined as:

- MLE (maximum likelihood estimation)

- \(p(y \mid x;\theta)\), where \(\hat{\theta}_{MLE} = \text{argmax}_{\theta} p(D;\theta)\)

- Used for frequentist inference since it provides point-wise estimates of the model parameters.

- CIs and p-values are based on the idea of repeated sampling.

- MAP (maximum a posteriori)

- \(p(y \mid x,\theta)\), where \(\hat{\theta}_{MAP} = \text{argmax}_{\theta} p(\theta \mid D) \propto L(\theta \mid D)p(\theta)\)

- Partial? Bayesian inference since it only summarizes the posterior mode.

- Similar to MLE, but incorporates the prior distribution, thus creating a regularized maximum likelihood estimate.

- Full Bayesian inference

- \(p(y \mid x,D) = \int_{\theta}p(y \mid \theta, x, D)p(\theta \mid D)d\theta\)

- The full posterior distribution is used for inference.

- Conjugate prior relationships

- Creating the full probability model

What are common algorithms for optimization?

Most regression methods (e.g. non-OLS) do not have closed form (analytical) solutions for minimizing loss functions or maximization of likelihood functions (ideally convex, continuous, and differentiable functions). Various iterative algorithms are used to balance the ease of implementation, speed of convergence, numeric stability, and ability to find global maxima/minima from competing local points or singularities.

- First order (based on information from the first derivative)

- Gradient descent is a first order (derivative) method. There are many different forms of gradient descent algorithms. These different algorithms usually define new ways to estimate/modify the learning rate, add additional hyperparameters (that often need tuning…), or find minima using different sets of model parameters or subsets of the data to reduce complexity.

- It’s nice because it moves in the direction of highest improvement and will stop when it gets to a local optimum.

- Problems arise because the learning rate can be very small leading to long run-times. If the learning rate is too large, it may diverge out of control.

- Gradient descent is a first order (derivative) method. There are many different forms of gradient descent algorithms. These different algorithms usually define new ways to estimate/modify the learning rate, add additional hyperparameters (that often need tuning…), or find minima using different sets of model parameters or subsets of the data to reduce complexity.

- Second order (based on information from the second derivative)

- Newton’s method uses the first order derivative (gradient) and second order derivative (Hessian matrix) to approximate the objective function with a quadratic function. There are many different optimization algorithms that use Newton’s method.

- It’s nice because its speed of convergence is quadratic (very fast).

- Problems arise in high-dimensional models where modifications are needed to better handle saddle points. It is computationally expensive.

- See Newton-Raphson in logistic regression and L-BFGS

- Newton’s method uses the first order derivative (gradient) and second order derivative (Hessian matrix) to approximate the objective function with a quadratic function. There are many different optimization algorithms that use Newton’s method.

- Expectation Maximization

- EM iteration alternates between performing an expectation (E) step, which creates a function for the expectation of the log-likelihood evaluated using the current estimate for the parameters, and a maximization (M) step, which computes parameters maximizing the expected log-likelihood found on the E step. These parameter-estimates are then used to determine the distribution of the latent variables in the next E step.

- The MLE of a parameter can be found even in incomplete data problems (missing data, censored data, latent data).

- First order (based on information from the first derivative)

What are loss/cost/objective functions?

- Loss refers to the function defining goodness of fit applied to a single observation. For example, \(\text{loss function} = L(x_i, y_i)\). The regularization term may or may not be included?

- Cost refers to the function defining goodness of fit applied to the entire sample, and summarized as the average loss. For example, \(\text{cost function} = \frac{1}{n} \sum_{i=1}^{n}L(x_i, y_i)\). The regularization term may or may not be included?

- The objective function generally refers to the cost function including the regularization term if needed.

There are many different loss/cost/objective functions:

- Binary outcomes

- Log loss (Cross-entropy loss)

- \(L(y, p) = -[y \text{log}(p)+(1-y)\text{log}(1-p)]\), where \(y \in \{0,1\}\) and \(p=P(y=1)\).

- \(L(y, f(x)) = log(1 + e^{-yf(x)})\)

- Used in logistic regression

- Outputs well-calibrated probabilities

- Hinge loss

- Used in standard SVM models

- Exponential loss

- Adaboost loss

- Gini index

- 0-1 loss

- Log loss (Cross-entropy loss)

- Continuous outcomes

- Squared error loss

- \(L(y, f(x)) = (y - f(x))^2\)

- The loss function for OLS.

- Cost function is the mean squared error loss, AKA quadratic loss.

- Used for estimating the mean outcome, \(f(x) = E(Y \mid X=x)\).

- Advantages: Differentiable everywhere.

- Disadvantages: Sensitive to outliers.

- Absolute error loss

- \(L(y, f(x)) = |y - f(x)|\)

- Cost function is the mean absolute loss.

- Used for estimating the median outcome, \(f(x) = \text{median}(Y \mid X=x)\).

- Advantages: Not as sensitive as squared error loss.

- Disadvantages: Not differentiable at zero.

- Huber loss

\[\begin{equation}

L(y, f(x)) =

\begin{cases}

(y - f(x))^2 & \text{for } |y - f(x)| \leq \delta \\

2\delta |y - f(x)| - \delta^2 & \text{otherwise}

\end{cases}

\end{equation}\]

- Behaves like squared error loss when the loss is small and absolute error loss when the loss is large.

- Squared error loss

What is the likelihood function?

The Likelihood is a measure of the extent to which a sample provides support for each possible value of a parameter in a parametric model.

Given a probability density function \(f(x \mid \theta) = (\theta + 1)x^{\theta},\) the likelihood, \(L(\theta \mid x)\), is defined as \(L(\theta \mid x) = \prod_{i = 1}^{N}(\theta + 1)x_{i}^{\theta},\) and the corresponding log-likelihood, \(\Lambda(\theta \mid x)\), is defined as \(\Lambda(\theta \mid x) = \sum_{i = 1}^{N} \text{log}(\theta + 1) + \theta \text{log}(x_{i}).\)

If you want the maximum likelihood estimator (MLE), you need to find the maximum of the log-likelihood function: \(0 = \frac{\partial\Lambda(\theta \mid x)}{\partial\theta} = \sum_{i = 1}^{N} \frac{1}{\theta + 1} + \text{log}(x_{i}) = \frac{N}{\theta + 1} + \sum_{i = 1}^{N}\text{log}(x_{i})\)

What is the difference between a linear and nonlinear model?

A model is linear (or nonlinear) in its parameters. For example, \(y = \beta x^2 + \epsilon\) is linear in \(\beta\) but not in \(x\), while \(y = exp(\beta) x + \epsilon\) is nonlinear in \(\beta\) but linear in \(x\).

What is the difference between linear and generalized linear models?

- Linear model:

- Y, conditional on X, is normally distributed with a mean of the predicted values and some variance.

- \(Y \mid X \sim N(\beta X, \sigma^2)\)

- The error term \(\epsilon \sim N(0, \sigma^2)\)

- \(E(Y \mid X) = \beta X\)

- Fit with OLS

- Generalized linear model:

- Allows us to fit response variables that are from any exponential family (conditioning on the predictors).

- \(E(Y \mid X) = g^{-1}(\beta X)\)

- \(g(E(Y \mid X)) = \beta X\)

- \(g\) is the link function. The link function determines how the expected value of the response relates to the linear predictor.

- It also requires a variance function to determine how \(Var(Y)\) depends on the mean. \(Var(Y) = V(E(Y)).\)

- Fit with MLE

- Linear model:

What is the bias-variance tradeoff?

- Variance refers to the amount the predictions (i.e. the model) would change if the model was built using a different dataset.

- Bias refers to the error that is introduced by approximating a real life problem. The average error of a prediction.

- Noise is the inherent variability in the data.

Models that have high bias tend to have low variance. For example, simple linear regression. Models that have low bias tend to have high variance. For example, complex, flexible models. We want a model that is complex enough to capture the true relationship between the explanatory variables and the response variable, but not overly complex such that it finds patterns that don’t really exist.

You’ll commonly see the following relationship described for bias-variance decomposition. Given \(y=f(X)+\epsilon\) is the true data generating function and \(h(x) = wx+e\) is the sample fit to minimize squared error loss, we find that

\[\begin{equation} \begin{aligned} E\left[(y - h(x))^2\right] &= E\left[(h(x) - \bar{h}(x))^2\right] + (h(x) - f(x))^2 + E\left[(y - f(x))^2\right] \\ &= E\left[(h(x) - \bar{h}(x))^2\right] + (h(x) - f(x))^2 + Var(\epsilon) \\ &= \text{Variance + Bias}^2 \text{+ Noise} \\ &= \text{MSE}(h(x)) + \sigma^2 \\ &= \text{Expected prediction error} \\ &\approx CV(h(x)) \end{aligned} \end{equation}\]

Possible solutions for

- High variance

- More data

- Reduce model complexity

- Bagging

- regularization

- High bias

- Use a more complex model (more variables, interactions, nonlinearity)

- Boosting

What is regularization?

Regularization is the process of adding tuning parameters to a model to induce smoothness in order to prevent overfitting. This is most often done by adding a constant multiple to an existing weight vector. This constant is often the L1 (lasso), L2 (ridge), or the combination of L1 and L2 (elastic net). The model predictions should then minimize the loss function calculated on the regularized training set.

Regularization is synonymous with penalization. We regularize a model by penalizing estimates that would have otherwise violated some desirable behavior (e.g. sparsity)

What is sparsity?

A sparse statistical model is one in which only a relatively small number of parameters (or predictors) play an important role.

If p>N, and the true model is not sparse, then the number of samples, N, is too small to allow for accurate estimation of the parameters. But if the true model is sparse, so that only k<N parameters are actually nonzero in the true underlying model, then it turns out that we can estimate the parameters effectively using the lasso method. This is possible even though we are not told which k of the p parameters are actually nonzero.

References:

- Page 3, Statistical learning with sparsity by Hastie et al.

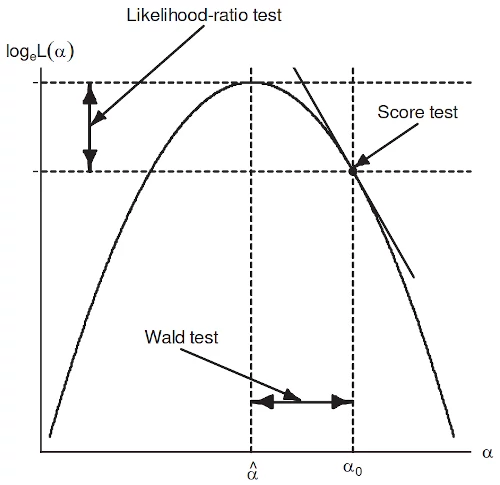

What’s the difference between likelihood ratio, Wald, and score tests?

The likelihood ratio test was developed by Jerzy Neyman and Egon Pearson (1928), the Wald test by Abraham Wald (1943), and the score test by C.R. Rao (1948) (Aitchison and Silvey (1958) developed the Lagrange Multiplier test which is equal to the score test).

The purpose of these tests is to compare two different (where one model is nested within the other) models to see which fits the data better. We assume the smaller model is true under the null hypothesis and that it is not the true model under the alternative. All three utilize the likelihood, though do so in different ways. The likelihood ratio test is the gold standard (most powerful), but the Wald and score tests may be computationally easier for certain model comparisons. The Wald and score tests generally perform poorly in small sample size scenarios. The Wald test is known to suffer from the Hauck-Donner effect.

- LRT: Fit both models and compare their likelihoods using \[\begin{align} LR &= -2log \left( \frac{L(model_1)}{L(model_2)}\right) \\ &= 2(logL(model_2) - logL(model_1)) \\ &\sim \chi^2_{df} \end{align}\] where \(model_1\) is the more restrictive model (fewer predictors; reduced), \(model_2\) the less restrictive model (more predictors; full), and the degrees of freedom for the test statistic is equal to the number of predictors included in \(model_2\) but not in \(model_1\). A small p-value indicates \(model_2\) is an improvement in fit.

- Wald: The Wald test only requires the larger model to be fit. It then tests how far the estimated coefficients are from zero (or whatever is used on the null hypothesis) in (asymptotic) standard errors and can allow multiple coefficients to be tested simultaneously. Thus we simply need to test that the parameters not included in the reduced model are simultaneously equal to zero. The resulting test statistic is also a \(\chi^2\) with degrees of freedom equal the number of predictors being tested. A small p-value indicates the larger model is an improvement in fit. Wald statistics are often the default values returned in statistical software because the standard error of the estimates (\(\sigma_{\hat{\beta}}\)) are available from the Hessian of the fitted model. The Z-test on \(\hat{\beta}/\sigma_{\hat{\beta}}\) provides p-values and normal confidence intervals.

- Score: The score test only requires the more restrictive model (reduced model). After fitting the model, the slope, or “score”, of the likelihood function (or log likelihood) can be evaluated at different values of coefficient values. If the score (slope) is very large, then we know that we are not close to the best value of the parameter, the MLE. The resulting test statistic is also a \(\chi^2\) with degrees of freedom equal the number of predictors being tested. A small p-value indicates the larger model is an improvement in fit.

References:

Ref: John Fox, Applied Regression Analysis

Ref: John Fox, Applied Regression AnalysisWhat does it mean to fit a multivariable model? (Frisch-Waugh-Lovell theorem)

Assumptions

What are the assumptions of linear regression?

Validity

The data collected maps correctly to the questions of interest. The response variable reflects the phenomenon of interest and all relevant predictors are included.

Additivity

The effects of the covariates on the response are additive, along with the additive error term.

Linearity

The response variable is a deterministic component of a linear function of the predictors (linear in the parameters) plus an error term. The conditional means of the response are a linear function of the predictor variables.

No multicolinearity

The predictor variables are not a linear combination of each other. The design matrix \(\mathbf{X}\) is full rank.

Independence of errors

The errors from the regression line are independent (uncorrelated). In other words, the observations are independent from one another.

Equal variance of errors

The errors from the regression line have similar variability across the range of the response.

\(E[\epsilon \mid X] = 0\)

The errors have mean 0. This is also known as the strict exogeneity assumption. Exogeneity is violated when a confounding variable is not included.

Normality of errors

Normality of errors provides finite-sample guarantees for hypothesis testing and equivalence between the OLS estimate and the MLE.

How can you validate the validity assumption of linear regression?

- Use expert’s knowledge to determine the functional relationship with the response.

- If a relevant predictor variable is omitted:

- The OLS estimator of the coefficients will be biased (if correlated with each other).

- The variance of the error term will be biased.

- The covariance matrix of the coefficients will be biased.

- If an irrelevant predictor variable is included:

- The OLS estimator of the coefficients are unbiased (As the unneeded coefficient should be 0).

- The covariance matrix of the coefficients will be biased (increased).

- Before estimating coefficients, predict the sign of them. If they are different than expected, you may have omitted something correlated with that predictor variable.

References:

How can you validate the linearity assumption of linear regression?

- The residual vs. predicted plot should be symmetrically distributed around a horizontal line. If this relationship drifts away from zero, then the conditional expected response isn’t linear in the fitted values.

- Each individual predictor vs. observed plot should be symmetrically distributed around a linear line.

How can you validate the multicolinearity assumption of linear regression?

- Check pairwise correlations of predictor variables.

- Check for instability in coefficients and standard error of those estimates.

- Check the variance inflation factors (VIF)

- A VIF of 1.9 tells you that the variance of a particular coefficient is 90% bigger than what you would expect if there was no multicollinearity.

- VIFs are calculated by taking a predictor, and regressing it against every other predictor in the model. This gives you the \(R^2\) values for each predictor. \(VIF = \frac{1}{1-R^2_i}\)

How can you validate the independence assumption of linear regression?

The residual plots should look as if they were random noise.

- residuals vs. observation order

- residuals vs. time

- residuals vs. response

- residuals vs. each predictor

- ACF plot

Otherwise, verify that each observation in the data is independent of others.

How can you validate the equal variance assumption of linear regression?

The residual vs. fitted values plot should show constant variability.

How can you validate the normality of errors assumption of linear regression?

The QQplot should show a slope of one. QQplots can compare the distribution for any two vectors of data. For regression models, we usually look at a plot with the Normal distribution theoretical quantiles on the x-axis and the sample (residual) quantiles on the y-axis.

How can you fix the equal variance assumption of linear regression?

- Identify source of variation in residuals with residual vs. predictors plots.

- Use a variance stabilizing transformation. (Log or square root transform the response)

- Use a weighted least squares or robust regression.

- Use a generalized least squares (GLS) model.

References:

How can you fix the independence assumption of linear regression?

A linear mixed model (LMM) or generalized least squares (GLS) model may be a good choice as they provide methods to fit correlated errors.

How can you fix the linearity assumption of linear regression?

- You can try to identify source of nonlinearity with response vs. predictor plots.

- Log transformations on the response or predictors variables.

- Interaction effects between predictor variables.

- Polynomial terms for predictors.

- Spline terms for predictors.

How can you fix the normality of errors assumption of linear regression?

- Try transformations on response. (Log or square root)

- Try generalized least squares (GLS) model

- Try generalized linear model (GLM) model

What are the assumptions of logistic regression?

Validity

The data collected maps correctly to the questions of interest. The response variable reflects the phenomenon of interest (binary) and all relevant predictors are included.

Linearity

The log odds of the outcome (logit of outcome) are linearly related to each predictor variable.

Independence of observations.

Multicolinearity

The predictor variables are not a linear combination of each other.

What are the assumptions of Cox proportional hazards regression?

Validity

The data collected maps correctly to the questions of interest. The response variable reflects the phenomenon of interest (time to event or censoring) and all relevant predictors are included.

Linearity

The log hazard (or log cumulative hazard) of the outcome is linearly related to each predictor variable.

No Multicolinearity

The predictor variables are not a linear combination of each other.

Independence of observations

No event time bias

Each predictor variable is measured at or before baseline event time. When predictors, which are not a constant property of the observation, are measured after start of event time, then the observation had to remain event free until the time they were measured on that variable. This potential bias is known as event time bias (survival time bias or immortal time bias).

No informative censoring bias

This is generally defined by independence of censoring. subjects in subgroup \(g\) censored at time \(t\) should be representative of all subjects in subgroup \(g\) who remained at risk at time \(t\). i.e. random censoring conditional on each covariate.

Proportional hazards

There are no time by predictor interactions. The predictors have the same effect on the hazard function at all time points. The treatment effect should have the same relative difference in hazard compared to the control effect at all time points (their curves should not cross).

Specification

What conditions are required for causal interpretations in observational studies?

The conditions required for a causal analysis or conceptualization as a randomized experiment are:

- Consistency

- The values of treatment under comparison correspond to well-defined interventions that, in turn, correspond to the versions of treatment in the data.

- Exchangeability

- The conditional probability of receiving every value of treatment, though not decided by the investigators, depends only on measured covariates.

- Randomization produces exchangeability.

- Exogeneity is commonly used as a synonym for exchangeability.

- The treated, had they remained untreated, would have experienced the same average outcome as the untreated did, and vice versa.

- Positivity

- Each treatment value (treated vs. untreated) has a non-zero probability of being assigned, conditional on measured covariates, for each subject in a study. i.e. the probability is positive.

These conditions, and the specific functional form for identifiable effects, are usually untestable and can only be discussed with subject-matter knowledge and DAGs to justify the choices made.

Model specification can be performed under three general methods of inference:

- Description: How have things been?

- Prediction: What will happen next?

- Counterfactual/Causal: If we do X, what would happen to Y?

References:

- Consistency

What are DAGs?

DAGs (directed acyclic graphs) are useful tools to define the relationships between a set of covariates, exposure, and outcome variables. By making the structure and assumptions explicit, we can demonstrate whether the causal effect of the predictor of interest on the outcome is identifiable and, if so, which variables are required to ensure conditional exchangeability.

- Directed: points from cause to effect.

- Acyclic: no directed path can form a closed loop.

Common terminology used for DAG variables:

- X: Exposure, Treatment, Intervention, Primary Predictor, Counterfactual

- Y: Outcome, Response, Target

- Z: Confounder, Covariate, Secondary Exposure

- U: Unmeasured, Unobserved

References:

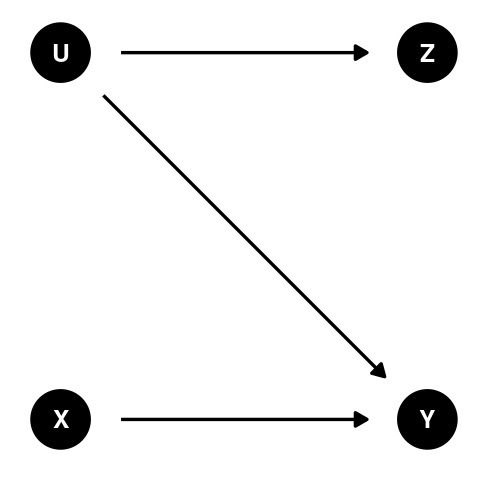

What is the backdoor criterion?

A set of covariates satisfy the backdoor criterion if all backdoor paths between the exposure and outcome are blocked by conditioning on the covariates and the covariates contain no variables that are descendants of the exposure. Conditional exchangeability is guaranteed if the backdoor criterion is satisfied.

The backdoor criterion is satisfied in two settings:

- No common causes of exposure and outcome.

- This means no backdoor paths need to be blocked. This is because the set of variables that satisfy the backdoor criterion is the empty set.

- This is representative of the marginally randomized experiment.

- There are no backdoor paths from the exposure to the outcome.

- Common causes of exposure and outcome but a subset of covariates that are non-descendants of the exposure suffice to block all backdoor paths.

- No unmeasured confounding.

- This is representative of the conditionally randomized experiment.

- Measured covariates are sufficient to block all backdoor paths from the exposure to the outcome

References:

- No common causes of exposure and outcome.

What is a backdoor path?

A backdoor path is a non-causal path from an exposure variable to an outcome variable. In other words, if we removed the direct paths that flowed from the exposure to the outcome, are there any other lines left that connects these two variables? Or, the path from the exposure to the outcome that links the exposure and outcome through their common cause Z.

Backdoor paths bias the effect estimates that we want (total or direct effect), thus we wish to create a model that blocks backdoor paths. Backdoor paths are blocked when we adjust for confounders or we do not adjust for colliders.

References:

What is a confounding variable?

Confounding is systematic error introduced into a statistical model when a third variable interferes with the exposure (predictor variable of interest) and outcome variables.

Covariates included in the model should be

- Associated with the predictor of interest.

- Cause the outcome (the covariate influences the outcome and not the other way around).

- Not located between the exposure and outcome on the causal pathway.

For example:

- Bad: exposure -> covariate -> outcome

- Good: covariate -> exposure -> outcome

- Consider a study about grades and alertness in class. Amount of sleep is associated with alertness and also could be regarded as a causal influence on grades. Thus amount of sleep is a good (confounding) covariate to adjust for.

- Amount of sleep (confounder) -> alertness (predictor) -> grades (outcome)

So the steps to follow are:

- Identify variables which may cause the outcome.

- Identify the variables in step 1 that are associated with the exposure.

- Make sure the variables in step 2 are not on the causal pathway (the exposure is not causing the covariate)

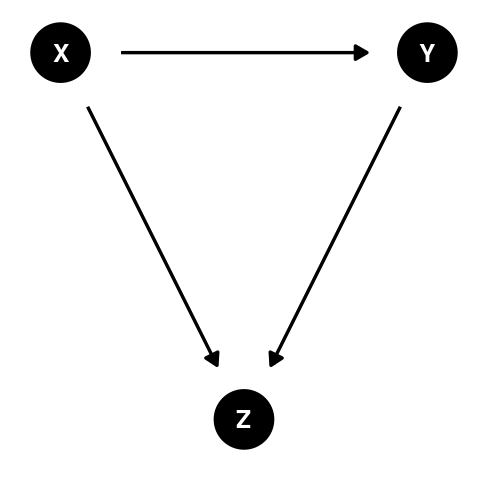

What is a collider variable?

Collider bias occurs when two variables (the outcome and exposure) independently cause a third variable (a covariate) and this third variable is included in the model. Controlling for a collider will open the backdoor path, thereby introducing bias.

Collider’s are path specific. The path from the exposure to the outcome must have a third variable in the middle with arrows (one from the exposure side and one from the outcome side) pointing to it.

References:

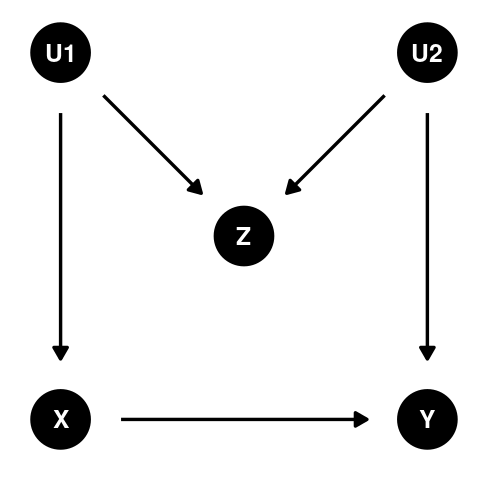

What is M-bias?

M-bias (named after the shape of the DAG) is caused by adjusting for a third covariate which is directly caused by two (potentially unobserved) ancestors of the exposure and outcome, thereby turning it into a collider [1]. Selection bias is a common example leading to M-bias.

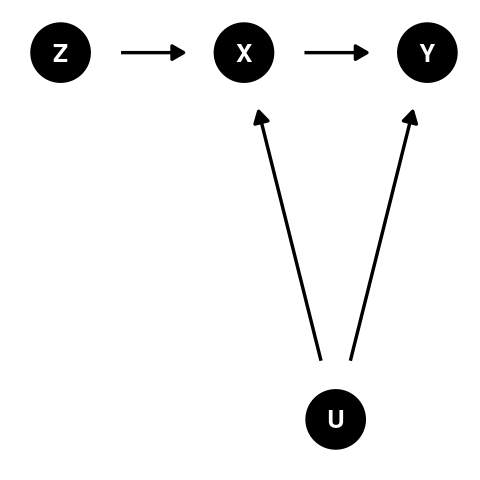

What is an instrumental variable?

Let \(y = bx + u\) where X and Y are observed variables and U is an error term. If X and U are correlated, then \(b\) cannot be estimated without bias. However, if there was a third observed variable, Z, that was correlated with X and uncorrelated with U, then \(b = \frac{r_{yz}}{r_{xz}}\).

For example, in a clinical trial, Z might be the treatment assigned to a subject, X is the treatment actually received, and Y is the outcome.

References:

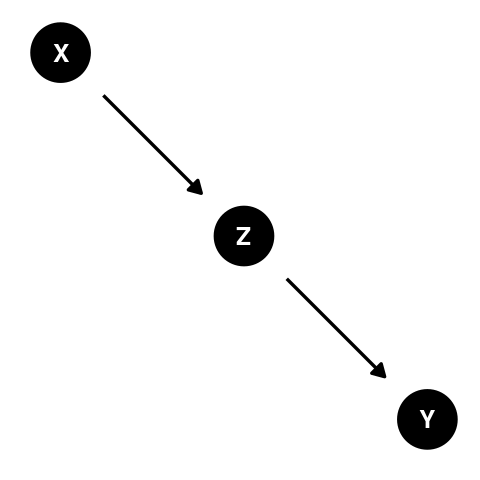

What is mediation?

A variable (Z) is a mediator if it lies between an exposure (X) and the outcome (Y) on the causal path: X -> Z -> Y.

- The indirect effect of X on Y is the product of the coefficients from X to Z and from Z to Y. In other words, the change in Y when Z increases by one unit and X remains constant.

- The direct effect of X on Y is the coefficient of the path from X to Y only. In other words, the change in Y when X increases by one unit and Z remains constant.

- The total effect of X on Y is the sum of the indirect and direct effects.

Mediation analysis traditionally follows from the paper by Baron and Kenny (1986) [1]:

- Verify that X is a significant predictor of Y:

lm(Y ~ X). If it is not, no ground for mediation. - Verify that X is a significant predictor of Z:

lm(Z ~ X). If it is not, no ground for mediation. - Verify that Z is a significant predictor of Y and the coefficient estimate for X is smaller in absolute value.

lm(Y ~ X + Z) - If the effect of X on Y completely disappears, Z fully mediates between X and Y (full mediation). If the effect of X on Y still exists, but in a smaller magnitude, Z partially mediates between X and Y (partial mediation). If the effect of X on Y is just as larger or larger, Z does not mediate between X and Y.

- Use Preacher and Hayes (2004) [2] bootstrapping method to generate confidence intervals on the mediation effect. ACME is the indirect effect of Z (total effect - direct effect) and tells us if our mediation effect is significant.

library(mediation); mediate()

(Review modern thoughts on this method)

What is moderation?